Busting RESTful GIS myths

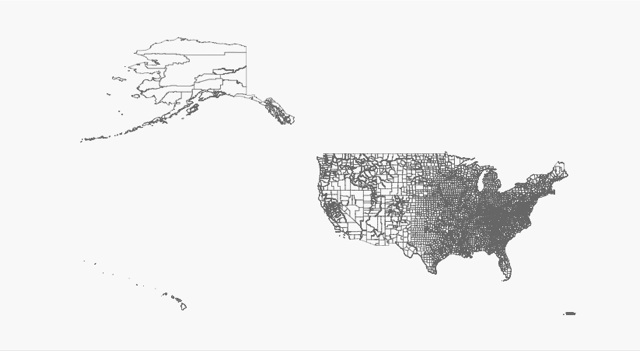

I'm going to use the announcement of Nanaimo's "authentic Web" GIS as an occasion to debunk some myths that surfaced on Twitter last week about REST and the Web, and about their fitness for designing alternatives to the OGC's service architecture.

Myth: RESTful Web services aren't based on standards.

Indeed, there are APIs on the programmable web touting "REST" which are very unlike each other. Not all of them are even RESTful when you get right down to it. They come from many different domains. It's understandable that a quick glance leaves some with the mistaken impression that interoperability is not a property of RESTful Web services.

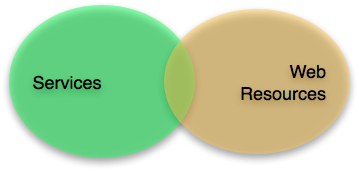

First, and this can't be said enough, because it still isn't really understood in the GIS community: REST is a particularly constrained style of architecture which just so happens to be the style of the World Wide Web. It is not a standard, but it needs standards and shapes them. Interoperability depends on standards, whether your architecture is RESTful or not. Paul Prescod, whom I'm quoting often these days, enumerates the necessary kinds of standards:

In application-level networking, there are three basic things to be standardized

Addressing -- how do we locate objects

Methods or Verbs -- what can we ask objects to do

Message payloads or Nouns -- what data can we pass to the objects to ask them to accomplish their goals

For RESTful Web services, the first two are standardized by HTTP/1.1:

The Hypertext Transfer Protocol (HTTP) is an application-level protocol for distributed, collaborative, hypermedia information systems. It is a generic, stateless, protocol which can be used for many tasks beyond its use for hypertext, such as name servers and distributed object management systems, through extension of its request methods, error codes and headers. A feature of HTTP is the typing and negotiation of data representation, allowing systems to be built independently of the data being transferred.

HTTP has been in use by the World-Wide Web global information initiative since 1990. This specification defines the protocol referred to as "HTTP/1.1", and is an update to RFC 2068.

You wanted standards? Even OGC service specifications acknowledge HTTP/1.1 (though not without misusing it; more on that in a future post). And HTTP/1.1 has been shaped by REST.

As Prescod points out, RESTful Web services push all interoperability problems into the third standardization category: message payload. This is a conscious decision. Interoperability may not be perfectly solvable, but you can isolate its problems, and RESTful services should do so. Do read all of Prescod's article on standardization. We spend way too much time talking about the "which" of standards in GIS without really thinking about the "what".
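To make the "push it all into the payload" idea concrete, here is a minimal sketch in Python. It assumes addressing and methods are already settled by URIs and HTTP, so the only domain-specific piece left in a client is a registry of parsers keyed by media type. The `PARSERS` table and `parse_payload` function are names invented for this illustration, not part of any library.

```python
import json
from xml.etree import ElementTree

# Hypothetical registry: the one place where format knowledge lives.
# Supporting a new format means adding an entry here, nothing else.
PARSERS = {
    "application/json": json.loads,
    "application/atom+xml": ElementTree.fromstring,
    "application/vnd.google-earth.kml+xml": ElementTree.fromstring,
}


def parse_payload(content_type, body):
    """Pick a parser from the Content-Type header value and apply it."""
    # Drop parameters such as "; charset=utf-8" before the lookup.
    media_type = content_type.split(";", 1)[0].strip().lower()
    try:
        parser = PARSERS[media_type]
    except KeyError:
        raise ValueError(f"no parser registered for {media_type!r}") from None
    return parser(body)
```

A client built this way fails in exactly one place when it meets a service it can't understand: the format lookup. That is the isolation Prescod is describing.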

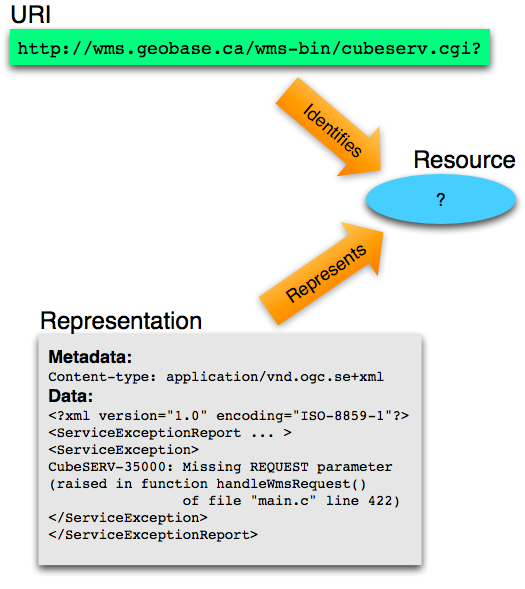

Myth: RESTful Web services aren't "lights out" accessible.

In fact, a properly RESTful service has better accessibility than an OGC service. To use the same analogy, what if you drop your special OGC service client in the dark and can't find it? How do you access your OGC service? Pardon me, but you're screwed: standing knee-deep in other web programming tools, and none of them can make sense of the OGC's unique addressing schemes and unique methods of interaction. With a RESTful service you can poke at it in a standardized way (HTTP/1.1 again) with curl, or XHR, or whatever, and get an actionable description. Of course, you're a GIS geek, and you keep your OGC client firmly attached to a reeling key chain, but consider the other agents on the Web that don't have an OGC service client at all. The OGC's special addressing scheme and methods make it very hard for those agents to get even a partial understanding of the service.
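Here is a rough sketch of what "poking at it in a standardized way" can look like, using nothing but Python's standard library. The throwaway local server stands in for a RESTful GIS resource; `FeatureHandler`, `poke`, `run_demo`, the `/features/1` path, and the sample feature are all hypothetical and only exist to make the example self-contained.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer


class FeatureHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a RESTful GIS resource."""

    def do_GET(self):
        body = json.dumps({
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-123.94, 49.17]},
            "properties": {"name": "Nanaimo"},
        }).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def poke(host, port, path):
    """GET a resource with a generic HTTP client and report what came back."""
    connection = HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", path, headers={"Accept": "application/json"})
        response = connection.getresponse()
        return {
            "status": response.status,
            "content_type": response.getheader("Content-Type"),
            "body": response.read().decode("utf-8"),
        }
    finally:
        connection.close()


def run_demo():
    """Serve the fake resource on a free local port and poke at it once."""
    server = HTTPServer(("127.0.0.1", 0), FeatureHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        host, port = server.server_address
        return poke(host, port, "/features/1")
    finally:
        server.shutdown()
        server.server_close()
```

Nothing in `poke` knows anything about GIS. The status code and the `Content-Type` header are enough for a generic agent to decide what to do next.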

Myth: REST is too immature for GIS.

In 2000, Roy Fielding wrote:

Since 1994, the REST architectural style has been used to guide the design and development of the architecture for the modern Web. This chapter describes the experience and lessons learned from applying REST while authoring the Internet standards for the Hypertext Transfer Protocol (HTTP) and Uniform Resource Identifiers (URI), the two specifications that define the generic interface used by all component interactions on the Web, as well as from the deployment of these technologies in the form of the libwww-perl client library, the Apache HTTP Server Project, and other implementations of the protocol standards.

Web architecture has been done in the REST style since 1994. HTTP/1.1 began to be adopted in 1997. The Web itself goes back to 1990. The OGC's service architecture is not more mature than this.

Myth: RESTful Web services are fine for small solutions, not for large, inter-agency solutions.

The World Wide Web is our largest network application. It's global. Interoperability is promoted through the use of common hypertext formats. One would think that geographic information systems using the same architecture, in the same style, could scale equally well, right? I think the onus is instead on the naysayers to make the case that their favored architectures can scale like the Web and bridge domains like the Web.

Comments

Re: Busting RESTful GIS myths

Author: Paul Ramsey

Some of the "rest isn't ready" myths, related to the "opengis is enterprise ready" one, come from the lack of a complete bundling of restful approaches into a documented implementation profile.

Starting from the premise that "I want to write one client that can read/write features from multiple sources", the OGC answer is simple: use the WFS document, which describes your protocol, and the GML and Filter documents, which describe your encoding.

The bits that are missing from a unified "REST feature server" specification aren't large... there are lots of encodings, and they can be re-used from the OGC stuff. But there's no specification that would allow us to put a server team and client team in separate rooms and allow them to come out with two pieces of software that talked to one another using REST principles.

Re: Busting RESTful GIS myths

Author: Dave Smith

In recent exchanges via Twitter, I'm afraid the 140char limitation does not always lend itself well to being able to adequately convey meaning, and as such, you may have misinterpreted the points I was trying to make.

I have no problem with REST. Whatsoever. I wholly agree that REST has standards, that it is mature and robust, and that it can and no doubt will serve large, disparate enterprise and cross-agency applications.

My problem is specifically with regard to how *geospatial* data and processing are handled *within* REST, not with REST itself. Currently it's that implementation piece that leaves many questions unanswered. Certainly there are many pieces and parts already existing in the geo world and elsewhere which may lend themselves to handling things like datums and projections (e.g. EPSG and WKT), bounding boxes, styling, filtering, temporal slices, and the like, but as yet there is no consistent way to do so within geospatial REST implementations; currently there are many disparate approaches to how these are treated.

And that's where the challenge of consistent discovery, access and use of geospatial data and processing comes into play.

Perhaps the answer may be a standard way of structuring RESTful geo assets, or perhaps it may be a means of conveying to a calling application how to access its capabilities, what is and what isn't supported, or some combination thereof, à la OGC GetCapabilities. These are things that are currently implemented and supported in the OGC world, which provide that kind of "lights-out" cross-agency facility of integration.

Re: Busting RESTful GIS myths

Author: Martin Davis

I really want to like REST. I have no particular love for the schema-heavy OGC standards, or the even more complex (and different!) world of SOAP. But for all the apparent simplicity of the REST approach, I just don't see how it provides the level of self-description required for true discovery and inter-operability.

For instance, I don't think you have shown how REST addresses "Application-Level Network Requirement #3 - Message Payloads or Nouns". Where is the meta-schema that describes the allowable syntax of URL and message payloads?

Also, I think there should be a 4th requirement: "Response Payloads - what we expect an object to tell us". And this also needs a meta-schema to describe its syntax.

So basically I disagree with the statement that REST allows a higher degree of "lights-out" interoperability than the OGC standards. If you know that an endpoint is OGC-W*S-compliant, you can trace through it in an automated fashion and discover exactly what syntax is allowed for all requests and responses. AFAIK the same is NOT true for REST.

And this probably all comes back to your opening point - REST is an architecture, not a standard. And that's fine - having architectural patterns is a good thing. But for REST to be anything more than a debating point, it needs to have some clear standards defined around it.

Re: Busting RESTful GIS myths

Author: Sean

Martin, URLs are opaque, navigability concerns are pushed onto the formats plate (#3) and are dealt with using standard hypertext formats like Atom, KML, or GML (xlink). That's the strategy, and I'll concede that it takes some getting to know.

AtomPub is a nice model for the structured protocols we GIS people crave. An AtomPub service document looks a bit like an OGC service capabilities document, but doesn't have to concern itself with resource methods because all resources have the same methods. All interop issues are dealt with as format issues, and so the service doc merely specifies the allowable content types for collections (think layers or feature types).
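As an illustration of the service-document idea, here is a small Python sketch that reads an AtomPub service document and lists each collection with the content types it accepts. The two XML namespaces are the ones defined by AtomPub and Atom; the sample document, its `example.org` URLs, the "Parcels" and "Parks" collections, and the `list_collections` helper are all made up for this example.

```python
from xml.etree import ElementTree

# Namespaces defined by the Atom Publishing Protocol and Atom itself.
NS = {
    "app": "http://www.w3.org/2007/app",
    "atom": "http://www.w3.org/2005/Atom",
}

# Hypothetical service document: collections play the role of layers.
SERVICE_DOC = """\
<service xmlns="http://www.w3.org/2007/app"
         xmlns:atom="http://www.w3.org/2005/Atom">
  <workspace>
    <atom:title>Hypothetical City GIS</atom:title>
    <collection href="http://example.org/parcels/">
      <atom:title>Parcels</atom:title>
      <accept>application/atom+xml;type=entry</accept>
      <accept>application/vnd.google-earth.kml+xml</accept>
    </collection>
    <collection href="http://example.org/parks/">
      <atom:title>Parks</atom:title>
      <accept>application/atom+xml;type=entry</accept>
    </collection>
  </workspace>
</service>
"""


def list_collections(service_xml):
    """Return title, href and accepted media types for every collection."""
    root = ElementTree.fromstring(service_xml)
    collections = []
    for collection in root.findall("app:workspace/app:collection", NS):
        collections.append({
            "title": collection.findtext("atom:title", default="", namespaces=NS),
            "href": collection.get("href"),
            "accept": [
                accept.text.strip()
                for accept in collection.findall("app:accept", NS)
                if accept.text
            ],
        })
    return collections
```

Note what is absent: there is no per-collection list of operations, because the methods are the same HTTP methods everywhere. The document only has to say where the collections are and which formats they take.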

KML hints at more organic RESTful GIS design.

Re: Busting RESTful GIS myths

Author: Jason Birch

I personally believe that what we really need are some good solid RESTful GIS implementations (like ESRI's and hopefully MapGuide's) to flush out problems and limitations, long before even thinking about standards.

If practice determines that standards are required to make REST accessible to GIS clients, then they should come after this initial proving stage, should only specify what is absolutely required, and should refer to general internet standards where possible rather than writing a GIS one-off. Codifying things that don't need to be codified--such as the link relationship restrictions in the OGC KML spec--may make application developers' lives easier, but they also cripple the power of the architecture.

As an example of a problem: when working out a way to present the HTML representation of the Nanaimo data, the thing that we found most difficult was making our query capabilities discoverable. OpenSearch goes part of the way, as do standard HTML forms, but neither has the ability to describe complex multi-term attribute and spatial query capabilities. Without this layer, the query capabilities are not truly discoverable, and all implementations require clients with special knowledge.

Is the best place to fix this with an OGC standard? I don't think so. What I think is really needed is to work to ensure that our needs are met by existing practices such as OpenSearch or URI Template. Then, once these components are in place and only if absolutely required, OGC can publish a profile that says "use this from here, that from there, BBOX means this DE9-IM relationship, etc".

As a side note, I haven't digested URI Template to the level that I'm comfortable that it can describe something like (a=1 or (a=2 and b=3)).

Re: Busting RESTful GIS myths

Author: Martin Davis

Sean,

Ok, URLs are opaque, and they are provided by documents presumably obtained by some previous query. And (per Jason's comment) that query is specified using something like OpenSearch.

What do you mean that "KML hints at a more RESTful design"?

For me it would help to crystallize this to see an implemented, specified example of this technology mix being used for non-trivial spatial query and retrieval. Eg. something that provides equivalent capabilities to WFS (rich query, multiple feature classes, etc), and which is well-specified enough to allow generic tools to be developed to use it. Is there anything like this out there?

@Jason: I agree that it's nice to have practice drive standards. What I wonder about is where is the centre of gravity that is going to make the various experiments coalesce into a clear, effective standard. You mention ESRI and MapGuide. And then you mention OpenSearch and URI Template. Are ESRI and MapGuide working towards those specifications? Or are they simply doing their own thing?

Another thing that strikes me: most of this discussion is about query and formats. Am I correct in thinking that those are orthogonal to REST? In which case, it seems to me that the focus on REST is distracting attention from tackling the harder issues.

Re: Busting RESTful GIS myths

Author: Martin Davis

Sean,

Have you seen this?

http://geoserver.org/display/GEOSDOC/RESTful+Configuration+API

This seems like a really thorough proposal for access to both GeoServer configuration information and the underlying spatial data.

But I'm confused about something. The proposal seems to fundamentally depend on the URLs being NON-opaque. Does this mean it is not in fact RESTful? Would it be better designed with opaque URLs? And if so, how would this work?

Thanks for assisting my efforts to understand REST and its ramifications...

Re: Busting RESTful GIS myths

Author: Sean

Yes, Martin, GeoServer's API seems (I don't have an instance handy to test) to be lacking the hypertext constraint, and therefore isn't technically RESTful. One possible remedy would be to formalize their URI construction rules and serve them up in a discovery doc like the one specified for the OpenSocial API (search for "5. Discovery"):

http://www.opensocial.org/Technical-Resources/opensocial-spec-v081/restful-protocol

Also blogged about at:

http://www.abstractioneer.org/2008/05/xrds-simple-yadis-uri-templates.html

If the discovery doc is treated like a web form, coupling between clients and servers can be minimized, and servers retain the freedom to evolve by changing the container paths in the URI templates.
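A minimal sketch of that idea in Python, assuming the discovery doc boils down to a mapping from names to URI templates. The `expand` function handles only plain `{name}` substitution, a small subset of what the URI Template drafts describe, and the `DISCOVERY_V1` and `DISCOVERY_V2` documents, their `example.org` paths, and `feature_url` are invented for illustration.

```python
import re
from urllib.parse import quote

_VARIABLE = re.compile(r"\{([A-Za-z0-9_]+)\}")


def expand(template, variables):
    """Fill {name} placeholders with percent-encoded values.

    Simplified on purpose: a missing variable raises KeyError.
    """
    def substitute(match):
        return quote(str(variables[match.group(1)]), safe="")

    return _VARIABLE.sub(substitute, template)


# Hypothetical discovery documents, as the client would fetch them.
# The server reorganized its container paths between the two versions.
DISCOVERY_V1 = {
    "feature": "http://example.org/geoserver/layers/{layer}/features/{fid}",
}
DISCOVERY_V2 = {
    "feature": "http://example.org/data/{layer}/items/{fid}",
}


def feature_url(discovery, layer, fid):
    """Client code: knows the template's name and variables, not its path."""
    return expand(discovery["feature"], {"layer": layer, "fid": fid})
```

The same `feature_url` call works against either discovery document, which is the point: the client is coupled to the variable names, as it would be to the field names of a web form, and not to the server's path layout.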

A GeoServer instance won't have millions of entities, so could probably also use an index doc in every container with explicit links to its children, as in AtomPub.

I said a KML application would be more "organic", placemarks linking to placemarks in an unstructured way.

Re: Busting RESTful GIS myths

Author: Martin Davis

Re the OpenSocial/URI Template stuff - great, this is what I like to see - clear, formal-enough specification documents which clearly lay out the interactions and data formats required to get a service to function!

This is what I'd hope to see to explain how REST can be used as a substitute for W*S. This stuff is just too complicated IMHO to be understandable via blog posts, or even appealing-but-limited examples.

Speaking of which, I get the same feeling from reading these documents that I had watching XML-RPC spiral into the murky depths of SOAP. It started out as a real simple back-of-a-napkin concept, but as people started to realize what was required to make things formally-specified, discoverable, toolable, etc. it turned into a tottering edifice of obscure spec documents which only major projects could hope to boil down into code.

I think there might be an irreducible minimum of information required to support heterogeneous distributed communication. The only way to simplify it is to settle on one protocol which is so widespread and so well supported that it becomes a no-brainer to use. The obvious examples are TCP/IP and HTTP. Neither is trivial, but nobody writes their own TCP/IP or HTTP drivers - they use widely available industrial-strength ones.

Re: Busting RESTful GIS myths

Author: Ryan Baumann

I think where a lot of those REST myths come from is somewhere along the line a lot of people started saying they had a "REST API" which was really just RPC with idiosyncratic XML (but not XML-RPC) over HTTP GET (not POST or anything else, though usually with clean, but undiscoverable, URLs). Of course, this is hardly a new observation, I just thought it may be worth pointing out - just because an API calls itself RESTful does not mean it is a RESTful API. Unfortunately, the only real way to combat this is with true RESTful APIs in practice, so that the advantages can be demonstrated (and hopefully illustrate the practical differences with APIs which just claim to be RESTful as well).

Re: Busting RESTful GIS myths

Author: Sean

Thanks, everyone, for the comments. I hope you've appreciated my attempt to make commenting here suck a bit less. A related thread has started on the geo-web-rest Google group and you might want to join if you're interested in helping push RESTful GIS forward.