This morning Howard Butler announced an upcoming Spatialindex 1.6.0 release:

http://lists.gispython.org/pipermail/spatialindex/2010-September/000232.html,

to be followed by an Rtree release. I'm becoming more interested in seeing

libspatialindex currently packaged for Linux distributions, particularly

Ubuntu. Lucid has 1.4.0. I wonder if I can parlay co-working with a

Launchpad developer into a fast track for 1.6.

After a long time away I'm using Rtree (0.6) in anger this week. I love what

Howard and Brent Pederson have done with the code and docs. Try out

>>> from rtree import Rtree

>>> help(Rtree)

and you'll see what I mean. I've also uploaded new HTML docs (by Howard, using

Sphinx). Let us know if you find any errors or unclear directions.

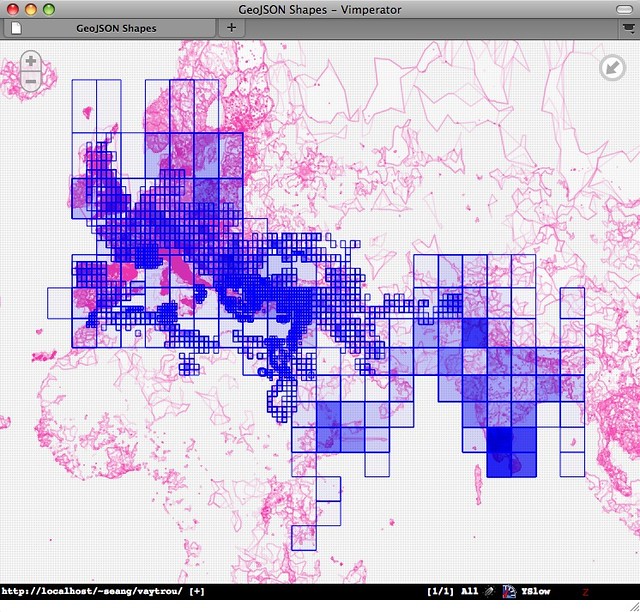

My own Rtree-using project is a simple, batching index for GeoJSON

feature-like objects. Deserialized GeoJSON goes in, deserialized GeoJSON comes

out. The interface is shown by this base class [indexing/__init__.py#L45]:

class BaseIndex(object):

"""Base class for indexes

Deals in GeoJSON-like dicts we will call ``items``, like:

{'id': 'db441f41-ec34-40fb-9f62-28b5a9f2f0e5', # required

'bbox': (0.0, 0.0, 1.0, 1.0), # recommended

'geometry': # required

{'type': 'LineString', 'coordinates': ((0.0, 0.0), (1.0, 1.0))},

'properties': { ... } # optional

... }

"""

def intid(self, item):

"""Return a unique integer id for the item"""

raise NotImplementedError

def bbox(self, item):

"""Return a (minx, miny, maxx, maxy) tuple for the item"""

return bbox(item)

def intersection(self, bbox):

"""Return an iterator over items that intersect with the bbox"""

raise NotImplementedError

def nearest(self, bbox, limit=0):

"""Return an iterator over the nearest N=limit items to the bbox"""

raise NotImplementedError

def index_item(self, itemid, bbox, item):

"""Index item using unique integer itemid and bbox as key"""

raise NotImplementedError

def unindex_item(self, itemid, bbox):

"""Unindex the item with (itemid, bbox) as key"""

raise NotImplementedError

Some of you (2 people, tops) will recognize this from zope.catalog. My first

implementation is an index that uses an instance of Rtree as a forward mapping

and an instance of OOBTree.OOBTree (from the ZODB package) as a backward

mapping (item values and id/bbox keys) [vrtree/__init__.py#L10].

class VRtreeIndex(BaseIndex):

"""An index with an R-tree as the forward mapping and a B-tree as the

backward mapping

Modeled after the example at http://docs.zope.org/zope.catalog/index.html.

"""

def clear(self):

self.fwd = Rtree()

self.bwd = OOBTree.OOBTree()

def __init__(self):

self.clear()

def intid(self, item):

"""Rtree requires unsigned long ids. Python's hash() yields signed

ints, so we add 2**32 to hashed values for the former."""

return hash(item['id']) + OFFSET

def intersection(self, bbox):

"""Return an iterator over Items that intersect with the bbox"""

for hit in self.fwd.intersection(bbox, True):

yield self.bwd[(hit.id, tuple(hit.bbox))]

def nearest(self, bbox, limit=1):

"""Return an iterator over the nearest N=limit Items to the bbox"""

for hit in self.fwd.nearest(bbox, limit, True):

yield self.bwd[(hit.id, tuple(hit.bbox))]

def index_item(self, itemid, bbox, item):

"""Add an Item to the index"""

if (itemid, bbox) in self.bwd:

self.unindex_item(itemid, bbox)

self.fwd.add(itemid, bbox)

self.bwd[(itemid, bbox)] = item

def unindex_item(self, itemid, bbox):

"""Remove an Item from the index"""

value = self.bwd.get((itemid, bbox))

if value is None:

return

del self.bwd[(itemid, bbox)]

self.fwd.delete(itemid, bbox)

I think this is the first time I've ever written code using a tuple as a

mapping key, but it's a perfect fit for an R-tree that takes a tuple of integer

id and bounding box as a key. This index will be given a persistence wrapper

and fronted by a Tornado based service that accepts JSON data and dispatches

to index methods. A "Spatial Solr", if you will, which I attempt to describe in the README.

That's not the only possible implementation. One that needed to run on App

Engine (for example), where there's no C-dependent Rtree, might use the App

Engine datastore for backward mappings, and load an instance of

pyrtree.RTree from the former on startup; the mapping serving as persistence

for an R-Tree that doesn't have its own persistence mechanism.

Naming projects gets harder and harder. "Vaytrou" is a verlan play on the verb

trouver (to find). "Vétrou" would be more authentic, but GitHub refused.

Update (2010-09-17): Here's the Vaytrou server. Simple. Handlers to accept batches of items and perform queries are in the works.

Update (2010-09-17): Spatialindex 1.6.0 is now available from links at http://trac.gispython.org/spatialindex/wiki/Releases.

Comments

Re: GDAL Web driver

Author: Christopher Schmidt

Hey, I recognize some of that text.

I'm not really sure how your second quote addresses my actual "I don't understand" section (which is terribly worded, bad on me for writing under the influence of sudafed). The HTTP spec surely describes byte ranges as meaningful, but does that mean that accessing things via byte ranges is RESTful?

Re: GDAL Web driver

Author: Sean

I should edit my post to make it clear that the second quote isn't meant to be a rebuttal or counterpoint to your first one. Partial representations aren't part of "textbook" REST, but aren't inconsistent with it.