GeoJSON website refreshed

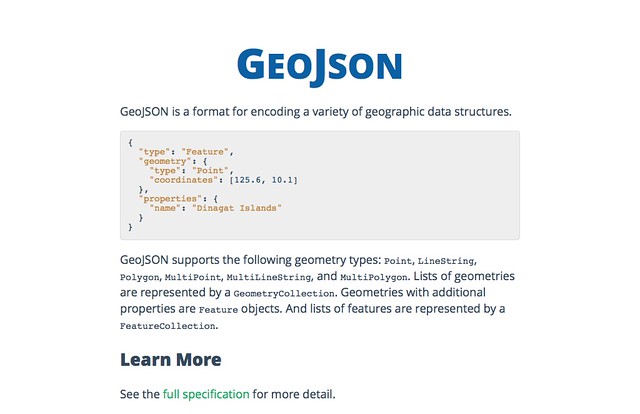

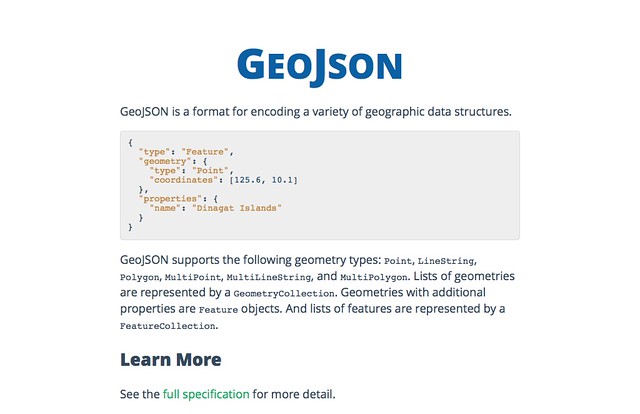

It's colorful and flat and responsive and more readable... it's the new http://geojson.org. And still one hundred percent Word and PDF-free HTML.

It's colorful and flat and responsive and more readable... it's the new http://geojson.org. And still one hundred percent Word and PDF-free HTML.

Tim Schaub has acquired the geojson organization on GitHub from Jason Roebuck (thanks, Jason!) and moved the old GeoJSONWG org to https://github.com/geojson. If you've cloned any of the repos, adjust your remotes accordingly. More good GeoJSON news coming soon.

Ever since I wrote Fiona, I've been asked if I have plans to do something similar for geospatial raster data. Having been out of the raster business for a few years, I always said "no serious plans, just blue-sky ideas." Today, I'm back in satellite image processing and very much want and need something like Fiona-for-rasters. Rasterio is my attempt to write such a Python package.

Whereas Fiona is about reading and writing GeoJSON-like objects from and to vector data files, rasterio exists to let Python programmers read and write Numpy arrays (or other array-like objects providing the PEP 3118 buffer interface) from and to raster data files. I intend rasterio to be fast and gotcha-free and let programmers do the things they do now with osgeo.gdal, but with familiar Python idioms and much less code.

I've just uploaded to PyPI rasterio 0.2. Share and enjoy.

Briefly, here's how you use rasterio to get the dimensions, number of bands, indexes of the bands, coordinate reference system, and coordinate transform matrix from a GeoTIFF.

>>> import rasterio >>> with rasterio.open('rasterio/tests/data/RGB.byte.tif') as src: ... print(src.width, src.height) ... print(src.count) ... print(src.indexes) ... print(src.dtypes) ... print(src.crs) ... print(src.transform) ... (791, 718) 3 [1, 2, 3] [<type 'numpy.uint8'>, <type 'numpy.uint8'>, <type 'numpy.uint8'>] {u'units': u'm', u'no_defs': True, u'ellps': u'WGS84', u'proj': u'utm', u'zone': 18} [101985.0, 300.0379266750948, 0.0, 2826915.0, 0.0, -300.041782729805]

Rasterio subscribes to PROJ.4 style dicts for coordinate reference systems, as Fiona does. The indexes attribute contains the indexes of all image bands, and those are used to access bands and analyze them like so.

>>> with rasterio.open('rasterio/tests/data/RGB.byte.tif') as src: ... npixels = src.width * src.height ... for i in src.indexes: ... band = src.read_band(i) ... print(i, band.min(), band.max(), band.sum()/npixels) ... (1, 0, 255, 29.94772668847656) (2, 0, 255, 44.516147889382289) (3, 0, 255, 48.113056354742945)

Rasterio is written using Cython, so it's fast and has Python 2/3 compatibility. I'm testing with Python 2.7 and Python 3.3 and have it deployed on Python 2.7 at work (where the benchmarks are very positive). I hope you'll find rasterio interesting and follow or fork.

As announced on the company blog, I'm now working at MapBox. I'm excited about this. I'm more than excited. My 8 year-old thinks she knows what excitement is, but dear child, this is something else. Working on bigger things with all the talented and thoughtful folks at MapBox is going to be fun.

Is the transition from academic to commerical work going to be hard? I've worked at fast-paced companies before – experience that definitely factored into my being hired by the AWMC – and I'm happy to be back in this environment. And because I'll be continuing to work on open source geospatial software, data, and protocols, I feel like my favorite academic communities win from having me on the mersh side of things.

What am I going to be working on? A bunch of different things, but my near future involves pixels. Gazillions of pixels. MapBox uses Shapely and Fiona already, so I'll get to stay involved with those projects.

Will I be moving to Washington, D.C. or San Francisco? No, I'm mainly going to work from Fort Collins, but I'll be at one of the offices regularly. I hope to persuade people that the Fort wouldn't be a terrible place to visit for sprints, either. Relatively cheap accomodations and food and plenty of nice beers to meet.

Thanks, everyone, for the overwhelming congratulations.

I've blogged a few times ( here and here) about a pattern I've developed for mapping feature data associated with a web page. Lyzi Diamond wrote a great post about this pattern a few weeks ago: http://lyzidiamond.com/posts/osgeo-august-meeting/. I'm thrilled that it's catching on a bit. The pattern is super simple, only three short paragraphs are needed to describe it.

Let's say I have a collection of web pages. Each is about a particular batch of features. http://sgillies.github.io/syriaca/, for example, is a demo of a page about Syriac places in antiquity. It bears a map in which the places are rendered.

The features to be rendered in the map are obtained by making an HTTP request for a GeoJSON resource. In my case http://sgillies.github.io/syriaca/syriaca.json is fetched using jQuery.getJSON().

var map = L.map('map'); var geojson = L.geoJson().addTo(map); $.getJSON(geojson_uri, function (data) { geojson.addData(data); });

The feature data is not written into a script in the page as they are in http://leafletjs.com/examples/quick-start-example.html. Yes, this means an extra HTTP request, but one that can be ansynchronous and cacheable.

Here's the crux of the pattern: the URI of that GeoJSON resource bound to the geojson_uri variable is specified not in the map script, but in a link in the head of the page.

<link rel="location" type="application/json" href="syriaca.json"/>

That URI is found by its "location" relation type.

var geojson_uri = $('link[rel="location"]').attr("href");

What's gained by having my Javascript follow a link to get GeoJSON features? Why not just write the features into a script? I've developed this pattern because it decouples the map from the data and lets me generalize the mapping script so I can reuse it across many pages, and also because I'm not thinking just about the slippy maps in the page. I'm keeping the bigger web in mind.

If the Javascript in my page was my only consideration I'd just embed the features in a script and maybe not use GeoJSON at all. But a resource like http://sgillies.github.io/syriaca/ isn't just a page with a slippy map, it's a starting point for other web map making software. The links are also for web clients written by people other than me.

Given the page's URL, a Python web client can get the same GeoJSON in the same way.

from bs4 import BeautifulSoup import requests r = requests.get('http://sgillies.github.io/syriaca/') soup = BeautifulSoup(r.content) points = [t for t in soup.find_all('link') if 'location' in t['rel']] print points # Output: # [<link href="http://sgillies.github.io/syriaca/syriaca.json" rel="location"/>] for p in points: s = requests.get(r.url + p['href']) json = s.json() print json['type'], len(json['features']) # Output: # FeatureCollection 955

The GeoJSON URI is much easier to find in a link by its relation type than by scraping it from a script (were I to use a literal in my first code example above instead of a geojson_uri variable). There's a IETF draft written by James Snell proposing standardization of the "location" relation: http://tools.ietf.org/html/draft-snell-more-link-relations-01. My pattern becomes much more useful with such a standard link relation.

Does this pattern avoid the browser's same-origin policy as Lyzi suggests in http://lyzidiamond.com/posts/external-geojson-and-leaflet-the-other-way/? As far as I know, links with rel="stylesheet" are the only ones that a browser fetches unprompted. The GeoJSON fetched by XHR is still subject to same-origin policy.

Welcome to my new blog. It's made with Tinkerer. I'm calling it a downgrade because there's no more database, no more comments, no more Python objects published for every request. It's just rsync and static HTML generated by Tinkerer and Sphinx from ReStructured Text docs I type into Vim. There are moving parts in this new blog, but they are all on my laptop. None of them are deployed to a web server.

My old posts were all written using ReST, so migrating is going to be easy peasy. Until I get around to it, old posts will be at http://sgillies.net/olden-days-blog/ and I'll make sure the old URLs redirect.

Version 1.0.2 is tagged and uploaded to PyPI. The changes:

GeoPandas is driving half of these changes. Thanks, Kelsey! The others are GeoJSON format related. Dumpgj is a program that does much of what ogr2ogr does, and with more natural ordering of options and parameters. Because stdout is the default for dumpgj, it's a good fit for Tom MacWright's geojsonio-cli. From my Fiona repository, this

$ dumpgj docs/data/test_uk.shp | geojsonio

opens http://geojson.io in my browser with the GeoJSON carried in a application/json type data URI. You can do the same thing with ogr2ogr, but I think the above is a bit more tidy than this:

$ ogr2ogr -f GeoJSON /vsistdout/ docs/data/test_uk.shp test_uk | geojsonio

After 5 years, I'm leaving ISAW and NYU. Friday (the 13th!) is my last day. It's been a tremendous privilege to work here, work largely funded by the National Endowment for Humanities (and thereby many of you dear readers), but I can no longer deny my itch to get back to my professional passions: maps and mapping data for the web. Building classics research infrastructure and trying to change scholarly communication has been very interesting, but in all honesty, I'd rather be making better maps and better mapping infrastructure.

On Monday I'll be just a normal user of Pleiades and no longer responsible for its day to day operation. Leaving the engine room will be a big change for me. Looking back, I'm very proud of what I've helped the Pleiades community accomplish. We built a framework for correcting and extending the Barrington Atlas gazetteer and provided a spatial foundation for future digital classics projects. We were pioneers in microattribution. The idea of URIs for classical concepts that we championed is now entirely mainstream, as is the idea of interacting with classical resources using HTTP and REST. Development in the open, with open source licensing, public source repositories, and public mailing lists is now the norm. We took the public funding of Pleiades very seriously and spun out useful open source GIS software like Shapely and Fiona. And the GeoJSON format has roots in Pleiades, maybe you've heard of it?

I'll miss being paid to work at one of the hubs of collaboration between all the wonderful folks at the Alexandria Archive, American Numismatic Society, Ancient World Mapping Center, Duke Collaboratory for Classics Computing, Epigraphic Database Heidelberg, Kings College (London) DH, Pelagios, and Portable Antiquities Scheme. It's been an honor, everybody. Thank you.

Of all these collaborations, it's the one with Tom Elliott, my boss, that I'll miss the most. NYU is a big company and some things that go down at big companies are less than ideal, but he has been the ultimate firewall; bureaucratic nonsense never leaked through into my work life. Tom's the glue that holds the digital classics field together and his vision of it makes positions like mine (no Classics Ph.D. required!) possible. Most of all, he's my friend. Thanks, Tom.

I've got 2 more days of documentation sprinting ahead of me and then I'll be available for new work.

I tried to say no to every extraneous feature on the road to 1.0. I also overlooked at least one important feature: control over the order of record fields when writing data files.

The fields of a Collection (the object you get when you call fiona.open()) are expressed as a mapping. But Python's standard mapping implementation, the dict, is not predictably sorted:

Keys and values are iterated over in an arbitrary order which is non-random, varies across Python implementations, and depends on the dictionary’s history of insertions and deletions.

and so in Fiona 1.0 there was a very probable mismatch between the output of the ogrinfo program and Collection.schema['properties']. Where ogrinfo reports ['CAT', 'FIPS_CNTRY', 'CNTRY_NAME', 'AREA', 'POP_CNTRY']

$ ogrinfo docs/data/test_uk.shp test_uk -so INFO: Open of `docs/data/test_uk.shp' using driver `ESRI Shapefile' successful. Layer name: test_uk Geometry: 3D Polygon Feature Count: 48 Extent: (-8.621389, 49.911659) - (1.749444, 60.844444) Layer SRS WKT: GEOGCS["GCS_WGS_1984", DATUM["WGS_1984", SPHEROID["WGS_84",6378137,298.257223563]], PRIMEM["Greenwich",0], UNIT["Degree",0.017453292519943295]] CAT: Real (16.0) FIPS_CNTRY: String (80.0) CNTRY_NAME: String (80.0) AREA: Real (15.2) POP_CNTRY: Real (15.2)

the schema properties may be ordered differently by Fiona 1.0.

>>> list({ ... 'CAT': 'float:16', ... 'FIPS_CNTRY': 'str', ... 'CNTRY_NAME': 'str', ... 'AREA': 'float:15.2', ... 'POP_CNTRY': 'float:15.2'}.keys()) ['POP_CNTRY', 'CNTRY_NAME', 'CAT', 'AREA', 'FIPS_CNTRY']

I've changed this in 1.0.1 so that the schema properties are now an ordered dict with items ordered exactly as read by OGR.

>>> import pprint >>> with fiona.open('docs/data/test_uk.shp') as c: ... pprint.pprint(c.schema['properties']) ... {'CAT': 'float:16', 'FIPS_CNTRY': 'str', 'CNTRY_NAME': 'str', 'AREA': 'float:15.2', 'POP_CNTRY': 'float:15.2'}

To write a file with fields in a certain order, you must provide them as an ordered dict

from collections import OrderedDict schema_props = OrderedDict([('bar', 'int'), ('foo', 'str')]) c = fiona.open( '/tmp/file.shp', 'w', schema={'properties': schema_props, ...}, ... )

or a list (which gets converted to an ordered dict internally).

c = fiona.open( '/tmp/file.shp', 'w', schema={'properties': [('bar', 'int'), ('foo', 'str')], ...}, ... )

Heads up: creating an OrderedDict from a dict exposes you to the same uncertaintities as using a dict: the ordering is determined by the order of the dict's items, not by the order they appear in the Python expression.

>>> list(OrderedDict({'foo': 'str', 'bar': 'int'}).keys()) ['bar', 'foo']

Author: Alban

Summer is over and I try now to use Fiona 1.0 in Python 2.7 with gdal 1.9 on Windows 32bits (binaries are from http://www.lfd.uci.edu/~gohlke/pythonlibs/#fiona). I've got a error "ImportError: DLL load failed" when python import Fiona. It seem that ogrext.pyd try to load systematically gdal110.dll (same issue on gis stackexchange : http://gis.stackexchange.com/questions/67372/fiona-importerror-dll-load-failed).

Is this a problem in Fiona or in the windows binaries from www.lfd.uci.edu/~gohlke?

Author: Sean

Gohlke's Fiona binary requires his GDAL binary. It won't work with any other.

Recently, Tom MacWright announced geojson.io, an app for "drawing, changing, and sharing GeoJSON-formatted map data." My colleague Eric Kansa has used it to map finds at Poggio Civitate: http://geojson.io/#6176329. It's cool to see GeoJSON finally catching on in the archaeology/history/humanities circles where it has some roots. Wednesday, GitHub announced that GeoJSON Gists now get the same maps as GeoJSON files in repositories.

The GitHub announcement was bigger news, but I'm more interested in geojson.io. I'd love to see the interface reused in Pleiades somehow, someday.