One of the key constraints of a RESTful web service is adherence to hypertext as the engine of application state, or as Bill de hÓra says, links in content. AtomPub has this: service documents link to collections; collections link to entries; entries link to their editing resource. Why? For resiliency, evolvability, and longevity. Links in content allow clients and servers to be decoupled; an agent can follow its nose into the service and its contents, and need not be compiled against the service. The service is freer to add resources, proxy or cache them, move them, and phase them out. In theory, the properties of resiliency, evolvability, and longevity are products of the hypertext constraint. This theory is continually tested, and mostly validated, day after day, year after year, on the Web. Roy Fielding wrote in a comment on his blog:

REST is software design on the scale of decades: every detail is intended to promote software longevity and independent evolution. Many of the constraints are directly opposed to short-term efficiency.

If your services aspire to the level of infrastructure, links in content make for a better architectural style than one where all clients break when the API changes, or one that demands a client upgrade to get access to any new capabilities.
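To make the AtomPub chain concrete (service document to collection to entry to edit link), here is a minimal Python sketch. The service URI, both XML documents, and the function names are invented for illustration; only the namespaces, the `collection` element's `href` attribute, and the `rel="edit"` link relation come from the Atom and AtomPub specifications. The point is that the client starts from one URI and discovers everything else.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

APP = "{http://www.w3.org/2007/app}"
ATOM = "{http://www.w3.org/2005/Atom}"

# Hypothetical service document and collection feed, invented for illustration.
SERVICE_URI = "http://example.com/app/service"
SERVICE_DOC = """<?xml version="1.0"?>
<service xmlns="http://www.w3.org/2007/app"
         xmlns:atom="http://www.w3.org/2005/Atom">
  <workspace>
    <atom:title>Places</atom:title>
    <collection href="places/">
      <atom:title>All places</atom:title>
    </collection>
  </workspace>
</service>"""

COLLECTION_FEED = """<?xml version="1.0"?>
<feed xmlns="http://www.w3.org/2005/Atom">
  <title>All places</title>
  <entry>
    <title>Fort Collins</title>
    <link rel="edit" href="1"/>
  </entry>
</feed>"""


def collection_uris(service_xml, base_uri):
    """Return absolute URIs of the collections linked from a service document."""
    root = ET.fromstring(service_xml)
    return [urljoin(base_uri, c.get("href")) for c in root.iter(APP + "collection")]


def edit_uris(feed_xml, base_uri):
    """Return absolute edit URIs of the entries in a collection feed."""
    root = ET.fromstring(feed_xml)
    uris = []
    for entry in root.iter(ATOM + "entry"):
        for link in entry.findall(ATOM + "link"):
            if link.get("rel") == "edit":
                uris.append(urljoin(base_uri, link.get("href")))
    return uris


# The only URI the client knows in advance is SERVICE_URI.
collections = collection_uris(SERVICE_DOC, SERVICE_URI)
edits = edit_uris(COLLECTION_FEED, collections[0])
print(collections)
print(edits)
```

If the server later moves the collection, only the `href` in the service document changes; a client written this way keeps working.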

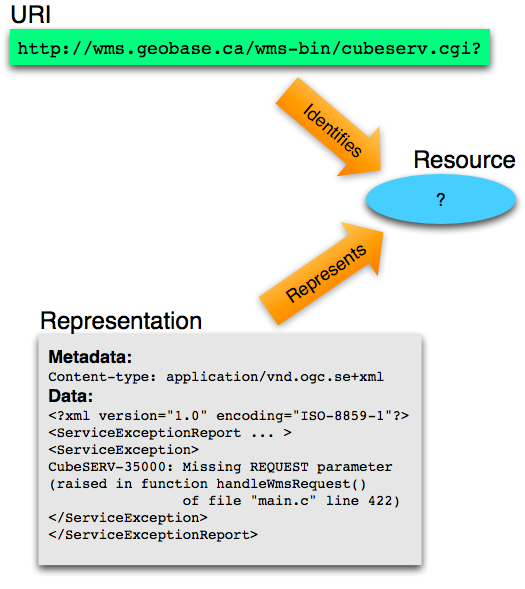

Service developers often mistake hierarchical URIs for the hypertext constraint. An API with URIs like http://example.com/api/food/meat/red looks clean, but unless there's a resource at http://example.com/api/food/meat/ that explicitly connects clients (whether using links, forms, or URI templating) to the resource at http://example.com/api/food/meat/red (and its sibling "white"), it's only a cosmetic cleaning. The API might as well use http://example.com/api?tag=food&tag=meat&tag=red. I pointed out the lack of links in the very handy New York Times Congress API on Twitter and got a response from a developer. I assert that for the API to be RESTful, there should be links to subordinate "house" and "senate" resources in the response below instead of a server error:

    $ curl -v "http://api.nytimes.com/svc/politics/v2/us/legislative/congress/103/?api-key=..."
    > GET /svc/politics/v2/us/legislative/congress/103/?api-key=... HTTP/1.1
    > Host: api.nytimes.com
    >
    < HTTP/1.1 500 Internal Server Error
    < Content-Type: application/xml; charset=utf-8
    < Content-Length: 279
    <
    <?xml version="1.0"?>
    <result_set>
      <status>ERROR</status>
      <copyright>Copyright (c) 2009 The New York Times Company. All Rights Reserved.</copyright>
      <errors>
        <error>Internal error</error>
      </errors>
      <results/>
    </result_set>
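For contrast, here is a sketch of what a linked response could look like and how a client might use it. Everything in the response body is hypothetical: the `<link>` elements, the `rel` and `title` values, and the `house/` and `senate/` hrefs are my invention, not anything the Congress API returns. Only the request URI is taken from the transcript above.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

# Hypothetical response body: the real API returned the 500 shown above.
# The <link> elements, "rel" values, and hrefs are invented for illustration.
CONGRESS_URI = "http://api.nytimes.com/svc/politics/v2/us/legislative/congress/103/"
LINKED_RESPONSE = """<?xml version="1.0"?>
<result_set>
  <status>OK</status>
  <results>
    <link rel="chamber" title="house" href="house/"/>
    <link rel="chamber" title="senate" href="senate/"/>
  </results>
</result_set>"""


def chamber_links(xml_text, base_uri):
    """Map each chamber title to the absolute URI the response links to."""
    root = ET.fromstring(xml_text)
    return {
        link.get("title"): urljoin(base_uri, link.get("href"))
        for link in root.iter("link")
        if link.get("rel") == "chamber"
    }


links = chamber_links(LINKED_RESPONSE, CONGRESS_URI)
for title, uri in sorted(links.items()):
    print(title, uri)
```

The client never builds a URI from a pattern it read in documentation; it resolves whatever `href` the server hands it.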

One of the best examples of links in geospatial service content is ESRI's ArcGIS sample server. It's entirely navigable for an agent such as a web browser. Agents that follow the links in the content can easily tolerate addition and deletion of services, or their move to new URIs. See also the JSON representation of that same resource, http://sampleserver1.arcgisonline.com/arcgis/rest/services/?f=json:

    {
      "folders": [
        "Demographics",
        "Elevation",
        "Locators",
        "Louisville",
        "Network",
        "Petroleum",
        "Portland",
        "Specialty"
      ],
      "services": [
        {
          "name": "Geometry",
          "type": "GeometryServer"
        }
      ]
    }

The service doesn't make it clear enough that the items in the "folders" and "services" lists are the relative URIs of subordinate resources, but that's clearly the intention. Never mind that the ArcGIS REST API is layered over SOAP services; it's very close to getting the hypertext constraint right and worth emulating and improving upon. ESRI is astronomical units beyond the OGC in applying web architecture to GIS. (Note: the JSON format itself has no link constructs, so JSON APIs are on their own. The lack of a common JSON linking construct is a big deal. As I've mentioned before, it prevents GeoJSON APIs from being truly RESTful.)
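Reading the listing as relative URIs, a client could resolve them against the URI it fetched, roughly as in the Python sketch below. Two things here are assumptions on my part rather than anything the response states: that folder names are relative references as they stand, and that a service lives at `<name>/<type>` below the listing. The function name is mine too.

```python
import json
from urllib.parse import urljoin

SERVICES_URI = "http://sampleserver1.arcgisonline.com/arcgis/rest/services/?f=json"

# Abbreviated copy of the listing shown above.
LISTING = json.loads("""
{
  "folders": ["Demographics", "Elevation"],
  "services": [{"name": "Geometry", "type": "GeometryServer"}]
}
""")


def subordinate_uris(listing, base_uri):
    """Resolve folder and service entries against the URI they were fetched from."""
    # Assumption: each folder name is a relative URI reference as written.
    uris = [urljoin(base_uri, folder) for folder in listing["folders"]]
    # Assumption: a service lives at "<name>/<type>" below the listing.
    uris += [
        urljoin(base_uri, f'{s["name"]}/{s["type"]}') for s in listing["services"]
    ]
    return uris


uris = subordinate_uris(LISTING, SERVICES_URI)
for uri in uris:
    print(uri)
```

Note that `urljoin` drops the `?f=json` query from the base, so a client wanting JSON all the way down would have to add it back. That the client has to know this, and the `<name>/<type>` rule, is exactly the gap a real link construct would close.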

As Fielding points out, constraining clients to crawl your service, instead of compiling against it, can have a performance cost. On the other hand, clients are welcome to optimize by caching the structure of a service for as long as the server allows, using the expiration and validation mechanisms built into HTTP/1.1. The extra cost of crawling need not be paid any more often than necessary.
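A rough sketch of that optimization in Python: a tiny cache that serves a stored representation while it is fresh (`Cache-Control: max-age`) and revalidates with `If-None-Match` once it goes stale. The class, the `fetch(uri, headers)` callable, and the fake server are hypothetical scaffolding so the example runs without a network; a real client would more likely lean on an HTTP library or caching proxy that already does this.

```python
import time


class StructureCache:
    """Cache one representation per URI, honoring max-age and ETag validators.

    `fetch(uri, headers)` is a hypothetical callable supplied by the caller; it
    must return (status, headers, body) with lower-case header names.
    """

    def __init__(self, fetch, clock=time.monotonic):
        self.fetch = fetch
        self.clock = clock
        self.entries = {}  # uri -> (expires_at, etag, body)

    @staticmethod
    def _max_age(headers):
        # Parse "max-age=N" out of Cache-Control; treat anything else as 0.
        for directive in headers.get("cache-control", "").split(","):
            name, _, value = directive.strip().partition("=")
            if name.lower() == "max-age" and value.isdigit():
                return int(value)
        return 0

    def get(self, uri):
        entry = self.entries.get(uri)
        if entry and self.clock() < entry[0]:
            return entry[2]  # still fresh: no request at all

        # Stale or missing: revalidate with the stored ETag if there is one.
        request_headers = {}
        if entry and entry[1]:
            request_headers["If-None-Match"] = entry[1]
        status, headers, body = self.fetch(uri, request_headers)

        if status == 304 and entry:
            body = entry[2]  # not modified: reuse the stored body
        etag = headers.get("etag", entry[1] if entry else None)
        self.entries[uri] = (self.clock() + self._max_age(headers), etag, body)
        return body


# A fake server standing in for the network, invented for this example.
calls = []


def fake_fetch(uri, headers):
    calls.append(dict(headers))
    if headers.get("If-None-Match") == '"v1"':
        return 304, {"cache-control": "max-age=60"}, b""
    return 200, {"cache-control": "max-age=60", "etag": '"v1"'}, b'{"folders": []}'


now = [0.0]
cache = StructureCache(fake_fetch, clock=lambda: now[0])
first = cache.get("http://example.com/services/")   # full fetch
second = cache.get("http://example.com/services/")  # fresh, served from cache
now[0] = 61.0
third = cache.get("http://example.com/services/")   # stale, revalidated with a 304
```

Three reads cost one full response and one bodyless 304, and the server stays in control of how long its structure may be assumed stable.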

Finally, consider that you might not even need REST in your API. Seriously, you might not need it. Not every service needs to span many organizations, or support dozens of different clients. Not every service needs to be around for 10, 15, 20 years.

I'm eager to see if the touted GeoCommons API has the hypertext constraint. I'm almost certain it will declare itself to be "RESTful", both because of the zeal of the company's marketing folks, and because its CTO, Andrew Turner, is honestly big on web architecture. If it does, it would be taking a step towards becoming a real spatial data infrastructure.

Update (2009-01-15): I just remembered that Subbu Allamaraju has a related and much more detailed article on describing RESTful applications.

Update (2009-01-20): links are already a requested Congress API feature.